Unsolved Problems in Blockchain Sharding

In the first part of the series we provided motivation for blockchain sharding and discussed some core concepts. In this post we will discuss some more advanced aspects of sharding, including its two biggest unsolved challenges: data availability and data validity.

Intro

The core idea in sharded blockchains is that most participants operating or using the network cannot validate blocks in all the shards. As such, whenever any participant needs to interact with a particular shard they generally cannot download and validate the entire history of the shard.

The partitioning aspect of sharding, however, raises a significant potential problem: without downloading and validating the entire history of a particular shard, a participant cannot be certain that the state with which they interact is the result of some valid sequence of blocks, or that this sequence of blocks is indeed the canonical chain in the shard. This problem doesn’t exist in a non-sharded blockchain.

We will first present a simple solution to this problem that has been proposed by many protocols and then analyze how this solution can break and what attempts have been made to address it.

The supposed simple solution

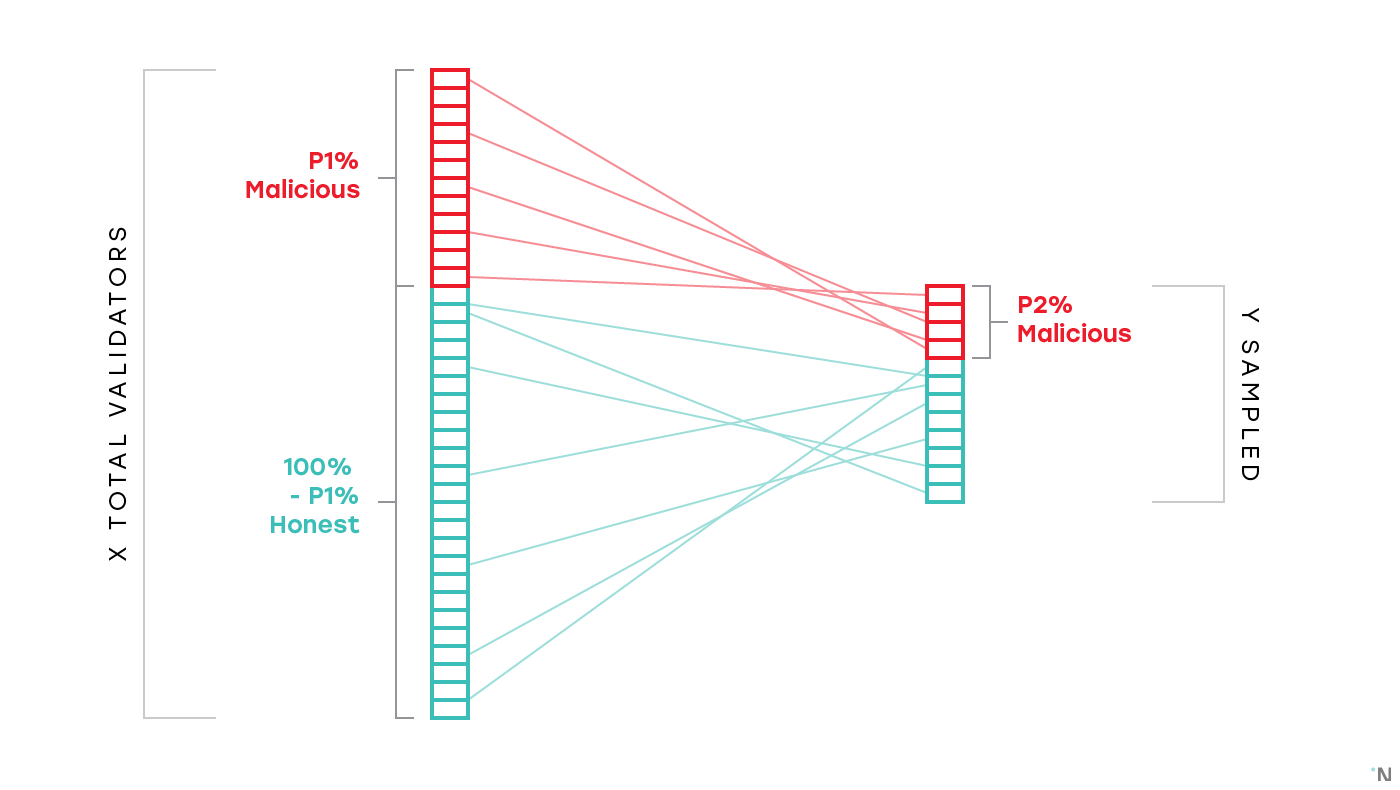

The naive solution to data validity is the following: let’s say we assume that the entire system has on the order of thousands of validators, out of which no more than 20% are malicious or will otherwise fail (such as by failing to be online to produce a block). Then if we sample ~200 validators, the probability that more than ⅓ of them fail can, for practical purposes, be assumed to be zero.

⅓ is an important threshold. There’s a family of consensus protocols, called BFT consensus protocols, that guarantees that for as long as fewer than ⅓ of participants fail, either by crashing or by acting in some way that violates the protocol, the consensus will be reached.
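The sampling claim can be checked numerically. The sketch below is only an illustration under assumptions that are not in the text: a pool of exactly 2,000 validators of which exactly 400 (20%) are faulty, and a committee of 200 drawn uniformly at random without replacement. The function name is hypothetical.

```python
from math import comb

def prob_more_than_fraction_faulty(total, faulty, sample, num, den):
    """Probability that strictly more than num/den of a random sample is faulty.

    Uses the hypergeometric distribution (sampling without replacement).
    """
    min_faulty = sample * num // den + 1   # smallest count strictly above num/den
    max_faulty = min(sample, faulty)
    ways = sum(
        comb(faulty, k) * comb(total - faulty, sample - k)
        for k in range(min_faulty, max_faulty + 1)
    )
    return ways / comb(total, sample)

# Assumed numbers: 2,000 validators, 20% faulty, committee of 200, threshold 1/3.
p = prob_more_than_fraction_faulty(2000, 400, 200, 1, 3)
print(f"P(more than 1/3 of the committee is faulty) = {p:.3e}")
```

Under these assumptions the probability comes out below one in ten thousand for a single committee. Note that it only covers validators who were already faulty when the sample was drawn.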

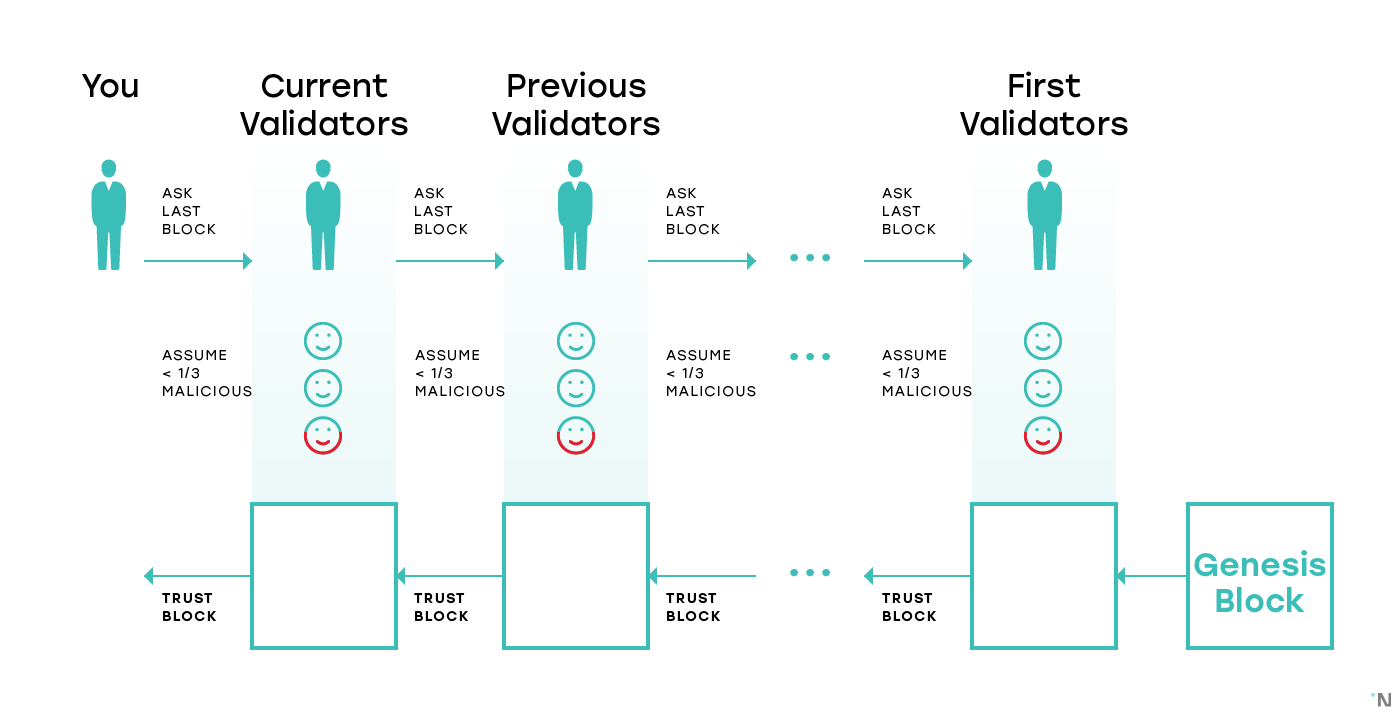

With this assumption of honest validator percentage, if the current set of validators in a shard provides us with some block, the naive solution assumes that the block is valid and that it is built on what the validators believed to be the canonical chain for that shard when they started validating. The validators learned the canonical chain from the previous set of validators, who by the same assumption built on top of the block which was the head of the canonical chain before that. By induction the entire chain is valid, and since no set of validators at any point produced forks, the naive solution is also certain that the current chain is the only chain in the shard.

This simple solution doesn’t work if we assume that the validators can be corrupted adaptively, which is not an unreasonable assumption (see here to learn more about adaptive corruption). Adaptively corrupting a single shard in a system with 1000 shards is significantly cheaper than corrupting the entire system. Therefore, the security of the protocol decreases linearly with the number of shards. To have certainty in the validity of a block, we must know that at any point in history no shard in the system has a majority of validators colluding; with adaptive adversaries, we no longer have certainty. As we discussed in the previous part, colluding validators can exercise two basic malicious behaviors: create forks, and produce invalid blocks.
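To make the linear decrease concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that stake is split evenly across shards and that an adversary needs a fixed fraction of one shard's stake to corrupt it. The token amounts and the function name are hypothetical.

```python
from fractions import Fraction

def stake_needed_to_corrupt(total_stake, num_shards, fraction):
    """Stake an adaptive adversary must control to corrupt one shard,
    assuming stake is spread evenly across all shards."""
    return Fraction(total_stake, num_shards) * fraction

total_stake = 1_000_000        # assumed total stake in the system
majority = Fraction(1, 2)      # assumed fraction needed to corrupt a shard

for shards in (1, 10, 100, 1000):
    cost = stake_needed_to_corrupt(total_stake, shards, majority)
    print(f"{shards:>4} shards: {float(cost):>9.0f} tokens to corrupt one shard")
```

With 1000 shards the adversary in this toy model needs one thousandth of the stake it would need against a single non-sharded chain.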

Malicious forks can be addressed by blocks being cross-linked to the Beacon chain that is generally designed to have significantly higher security than the shard chains. Producing invalid blocks, however, is a significantly more challenging problem to tackle.

Data Validity

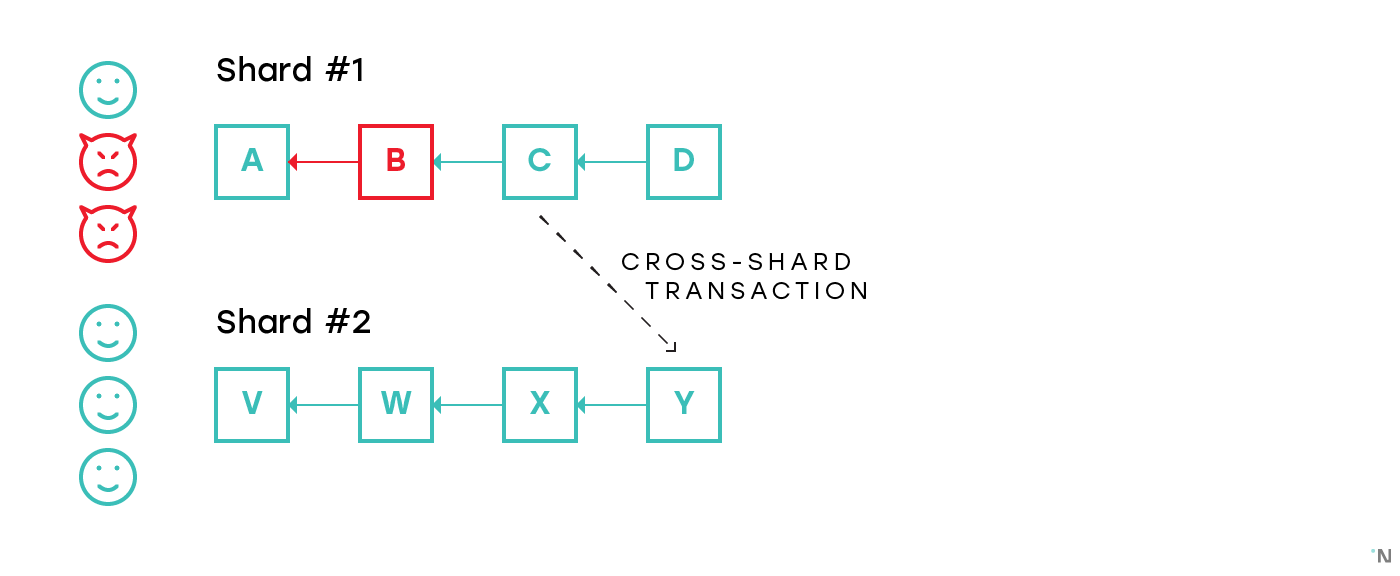

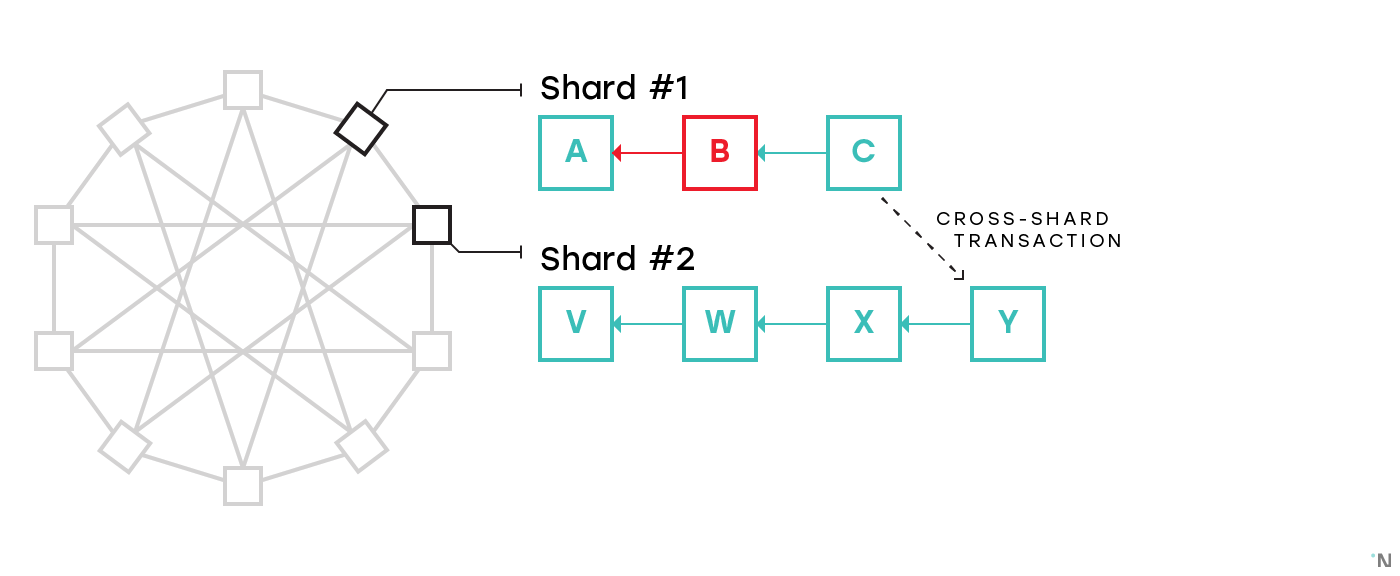

Consider the following figure, in which Shard #1 is corrupted and a malicious actor produces an invalid block B. Suppose that in this block B 1000 tokens were minted out of thin air on Alice’s account. The malicious actor then produces a valid block C (in the sense that the transactions in C are applied correctly) on top of B, obfuscating the invalid block B, and initiates a cross-shard transaction to Shard #2 that transfers those 1000 tokens to Bob’s account. From this moment the improperly created tokens reside on an otherwise completely valid blockchain in Shard #2.

Some simple approaches to tackle this problem are:

- For validators of Shard #2 to validate the block from which the transaction is initiated. This won’t work even in the example above, since block C appears to be completely valid.

- For validators in Shard #2 to validate some large number of blocks preceding the block from which the transaction is initiated. Naturally, for any number of blocks N validated by the receiving shard the malicious validators can create N+1 valid blocks on top of the invalid block they produced.
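The weakness of both approaches can be shown with a toy model. In the sketch below a block is reduced to a name and a flag saying whether its own state transition is correct. The `Block` type, the function names and the depth of 5 are all made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Block:
    name: str
    valid: bool   # whether the block's own state transition is correct

def recent_blocks_look_valid(chain, depth):
    """Check the initiating block plus the `depth` blocks preceding it,
    as a receiving shard with a bounded validation budget would."""
    return all(block.valid for block in chain[-(depth + 1):])

def hide_invalid_block(chain, depth):
    """Adversary's response: append depth + 1 correctly computed blocks."""
    padding = [Block(f"pad{i}", True) for i in range(depth + 1)]
    return chain + padding

chain = [Block("A", True), Block("B", False)]   # B mints tokens out of thin air
depth = 5                                        # assumed validation depth N

print(recent_blocks_look_valid(chain, depth))                             # False
print(recent_blocks_look_valid(hide_invalid_block(chain, depth), depth))  # True
```

Whatever depth the receiving shard picks, the adversary only has to pad the chain by one block more than that.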

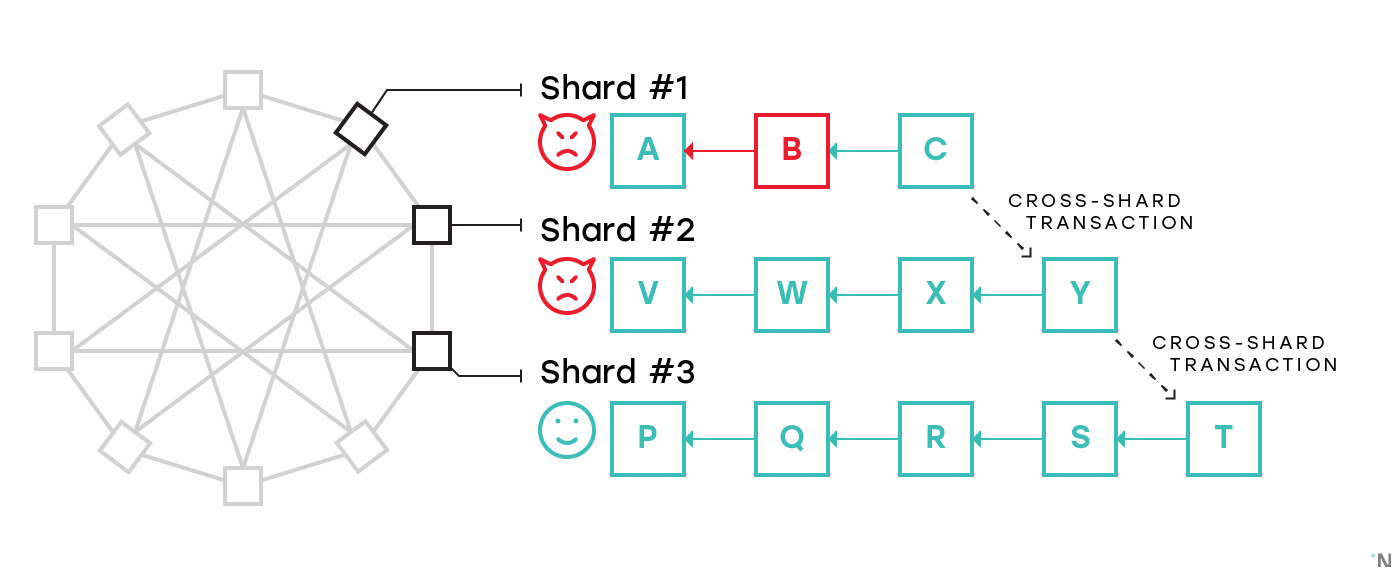

A promising idea to resolve this issue would be to arrange shards into an undirected graph in which each shard is connected to several other shards, and only allow cross-shard transactions between neighboring shards (e.g. this is how Vlad Zamfir’s sharding essentially works, and a similar idea is used in Kadena’s Chainweb). If a cross-shard transaction is needed between shards that are not neighbors, such a transaction is routed through multiple shards. In this design a validator in each shard is expected to validate all the blocks in their own shard as well as all the blocks in all the neighboring shards. Consider the figure below with 10 shards, each having four neighbors, and no two shards requiring more than two hops for a cross-shard communication:

Shard #2 validates not only its own blockchain, but also the blockchains of all its neighbors, including Shard #1. So if a malicious actor on Shard #1 attempts to create an invalid block B, then build block C on top of it and initiate a cross-shard transaction, such a cross-shard transaction will not go through, since Shard #2 will have validated the entire history of Shard #1 and will therefore identify the invalid block B.

While corrupting a single shard is no longer a viable attack, corrupting a few shards remains a problem. In the following figure an adversary corrupting both Shard #1 and Shard #2 successfully executes a cross-shard transaction to Shard #3 with funds from an invalid block B:

Shard #3 validates all the blocks in Shard #2, but not in Shard #1, and has no way to detect the malicious block.
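Both scenarios fit in a few lines of code. The sketch below is a deliberately simplified model rather than any protocol's actual routing logic: a shard fully validates only the shard it receives from, a corrupted shard accepts anything, and an honest shard can spot the invalid block only when its direct sender is the shard that produced it. The function name is hypothetical.

```python
def tainted_transfer_accepted(route, corrupted):
    """Return True if funds minted in an invalid block on route[0] are
    accepted by every shard along `route`."""
    origin = route[0]
    for sender, receiver in zip(route, route[1:]):
        if receiver in corrupted:
            continue           # colluding shard forwards the funds
        if sender == origin:
            return False       # honest neighbor sees invalid block B
    return True

# Only Shard #1 is corrupted: its honest neighbor rejects the transfer.
print(tainted_transfer_accepted([1, 2], corrupted={1}))         # False
# Shards #1 and #2 are corrupted: Shard #3 cannot tell.
print(tainted_transfer_accepted([1, 2, 3], corrupted={1, 2}))   # True
```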

There are two major directions for properly solving data validity: fishermen and cryptographic proofs of computation.

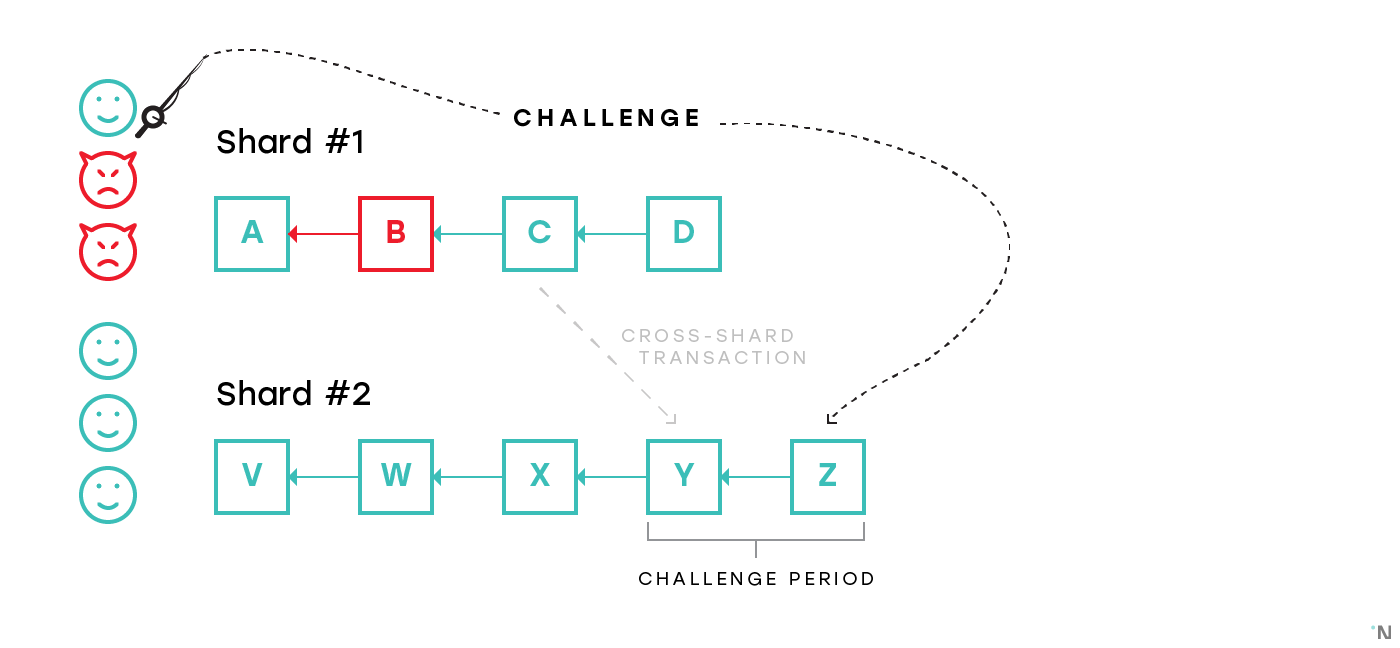

Fisherman

The idea behind the first approach is the following: whenever a block header is communicated between chains for any purpose (such as cross-linking to the beacon chain, or a cross-shard transaction), there’s a period of time during which any honest validator can provide a proof that the block is invalid. There are various constructions that enable very succinct proofs that the blocks are invalid, so the communication overhead for the receiving nodes is way smaller than that of receiving a full block.

With this approach, as long as there’s at least one honest validator in the shard, the system is secure.

This is the dominant approach (besides pretending the problem doesn’t exist) among the proposed protocols today. This approach, however, has two major disadvantages:

- The challenge period needs to be sufficiently long for the honest validator to recognize a block was produced, download it, fully verify it, and prepare the challenge if the block is invalid. Introducing such a period would significantly slow down the cross-shard transactions.

- The existence of the challenge protocol creates a new vector of attacks in which malicious nodes spam the system with invalid challenges. An obvious solution to this problem is to make challengers deposit some amount of tokens that are returned only if the challenge is valid. This is only a partial solution, as it might still be beneficial for the adversary to spam the system (and burn the deposits) with invalid challenges, for example to prevent a valid challenge from an honest validator from going through. These attacks are called Griefing Attacks.

Neither of the two problems with fishermen has a satisfactory solution, but using fishermen is still strictly better than allowing an invalid block to be finalized.
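A toy model of the challenge period and the deposit makes both disadvantages easier to see. Everything in the sketch below is an assumption made for illustration: the class and method names, the abstract time units, the boolean `proof_is_valid` standing in for verification of a succinct invalidity proof, and the rule that late or unknown challenges burn the deposit.

```python
class ChallengeWindow:
    """Toy model of a fisherman-style challenge period for block headers."""

    def __init__(self, period, deposit):
        self.period = period     # length of the challenge period, in time units
        self.deposit = deposit   # tokens a challenger must lock up
        self.pending = {}        # block hash -> time the header was received
        self.rejected = set()    # headers proven invalid

    def submit_header(self, block_hash, now):
        self.pending[block_hash] = now

    def challenge(self, block_hash, proof_is_valid, now):
        """Process a challenge and return the challenger's net token change."""
        received = self.pending.get(block_hash)
        if received is None or now >= received + self.period:
            return -self.deposit     # unknown header or too late: deposit burned
        if proof_is_valid:
            del self.pending[block_hash]
            self.rejected.add(block_hash)
            return 0                 # deposit returned
        return -self.deposit         # invalid challenge: deposit burned

    def is_accepted(self, block_hash, now):
        """A header is accepted only after an unchallenged full period."""
        received = self.pending.get(block_hash)
        return received is not None and now >= received + self.period

window = ChallengeWindow(period=10, deposit=100)
window.submit_header("B", now=0)
window.challenge("B", proof_is_valid=False, now=3)   # spam: costs the deposit
window.challenge("B", proof_is_valid=True, now=7)    # honest fisherman
print(window.is_accepted("B", now=20))               # False
```

In this model nothing that depends on the header can proceed before `period` has elapsed, which is the latency cost, and every invalid challenge still has to be processed even though it burns a deposit, which is the opening for griefing.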

Succinct Non-interactive Arguments of Knowledge

The second solution to multiple-shard corruption is to use cryptographic constructions that allow one to prove that a certain computation (such as computing a block from a set of transactions) was carried out correctly. Such constructions do exist, e.g. zk-SNARKs, zk-STARKs and a few others, and some are actively used in blockchain protocols today for private payments, most notably ZCash. The primary problem with such primitives is that they are notoriously slow to compute. For example, Coda Protocol, which uses zk-SNARKs specifically to prove that all the blocks in the blockchain are valid, said in an interview that it can take 30 seconds per transaction to create a proof (this number is probably smaller by now).

Interestingly, a proof doesn’t need to be computed by a trusted party, since the proof attests not only to the validity of the computation it is built for, but also to the validity of the proof itself. Thus, the computation of such proofs can be split among a set of participants with significantly less redundancy than would be necessary to perform some trustless computation. It also allows for participants who compute zk-SNARKs to run on special hardware without reducing the decentralization of the system.

The challenges of zk-SNARKs, besides performance, are:

- Dependency on less-researched and less-time-tested cryptographic primitives;

- “Toxic waste” — zk-SNARKs depend on a trusted setup in which a group of people performs some computation and then discards the intermediate values of that computation. If all the participants of the procedure collude and keep the intermediate values, fake proofs can be created;

- Extra complexity introduced into the system design;

- zk-SNARKs only work for a subset of possible computations, so a protocol with a Turing-complete smart contract language wouldn’t be able to use SNARKs to prove the validity of the chain.

While many protocols are looking into using zk-SNARKs long term, I do not know of any planning to launch with them besides Coda.

Data Availability

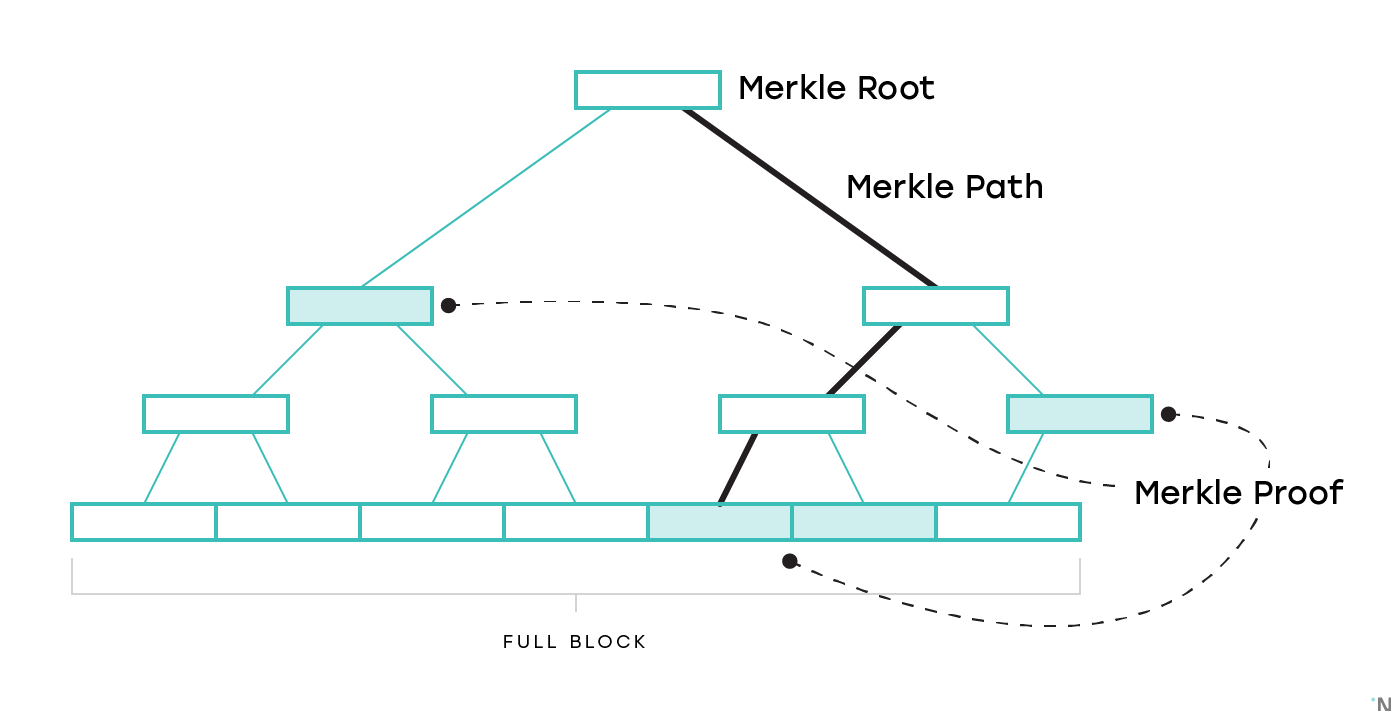

The second problem we will touch upon is data availability. Generally nodes operating a particular blockchain are separated into two groups: Full Nodes, those that download every full block and validate every transaction, and Light Nodes, those that only download block headers, and use Merkle proofs for parts of the state and transactions they are interested in.
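For readers unfamiliar with Merkle proofs, here is a minimal sketch of what a light node checks. It assumes a plain binary SHA-256 tree in which the last node of an odd-sized level is duplicated. Real protocols differ in hashing and encoding details, and the function names are illustrative.

```python
import hashlib

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root_and_proof(leaves, index):
    """Build the tree over `leaves` and return (root, proof for leaves[index]).

    The proof is a list of (sibling_hash, sibling_is_left) pairs, bottom-up.
    Assumes `leaves` is non-empty.
    """
    level = [sha256(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])          # duplicate last node on odd levels
        sibling = index ^ 1                  # neighbor within the same pair
        proof.append((level[sibling], sibling < index))
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof

def verify_merkle_proof(leaf, proof, root):
    """What a light node does: recompute the path from the leaf to the root."""
    node = sha256(leaf)
    for sibling, sibling_is_left in proof:
        node = sha256(sibling + node) if sibling_is_left else sha256(node + sibling)
    return node == root

transactions = [b"tx0", b"tx1", b"tx2"]
root, proof = merkle_root_and_proof(transactions, 2)
print(verify_merkle_proof(b"tx2", proof, root))      # True
print(verify_merkle_proof(b"forged", proof, root))   # False
```

The light node needs only the root from the block header and a proof whose size is logarithmic in the number of leaves. The proof says nothing about whether the rest of the block was ever published.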

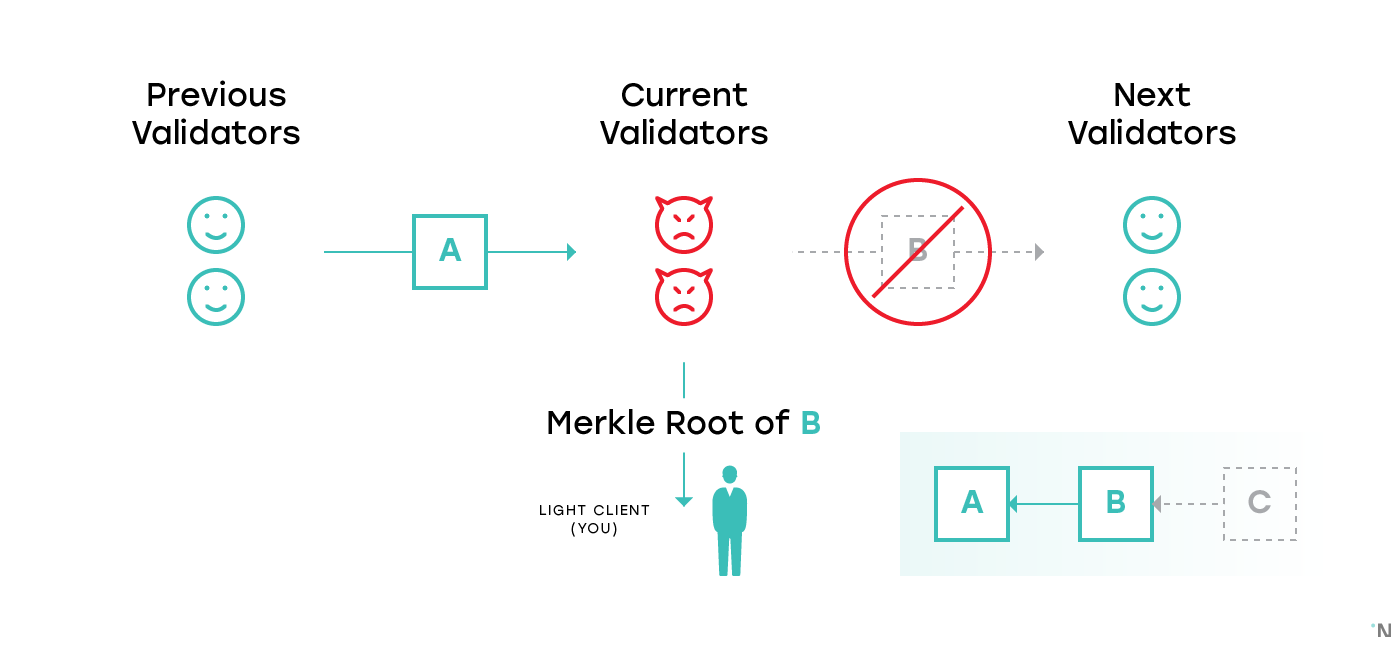

Now if a majority of full nodes collude, they can produce a block, valid or invalid, and send its hash to the light nodes, but never disclose the full content of the block. There are various ways they can benefit from it. For example, consider the figure below:

There are three blocks: the previous, A, is produced by honest validators; the current, B, has validators colluding; and the next, C, will also be produced by honest validators (the blockchain is depicted in the bottom right corner).

You are a merchant. The validators of the current block (B) received block A from the previous validators, computed a block in which you receive money, and sent you a header of that block with a Merkle proof of the state in which you have money (or a Merkle proof of a valid transaction that sends the money to you). Confident the transaction is finalized, you provide the service.

However, the validators never distribute the full content of the block B to anyone. As such, the honest validators of block C can’t retrieve the block, and are either forced to stall the system or to build on top of A, depriving you as a merchant of money.

When we apply the same scenario to sharding, the definitions of full and light node generally apply per shard: validators in each shard download every block in that shard and validate every transaction in that shard, but other nodes in the system, including those that snapshot shard chains state into the beacon chain, only download the headers. Thus the validators in the shard are effectively full nodes for that shard, while other participants in the system, including the beacon chain, operate as light nodes.

For the fisherman approach we discussed above to work, honest validators need to be able to download blocks that are cross-linked to the beacon chain. If malicious validators cross-linked a header of an invalid block (or used it to initiate a cross-shard transaction), but never distributed the block, the honest validators have no way to craft a challenge.

We will cover two approaches to address this problem that complement each other.

Proof of Custody

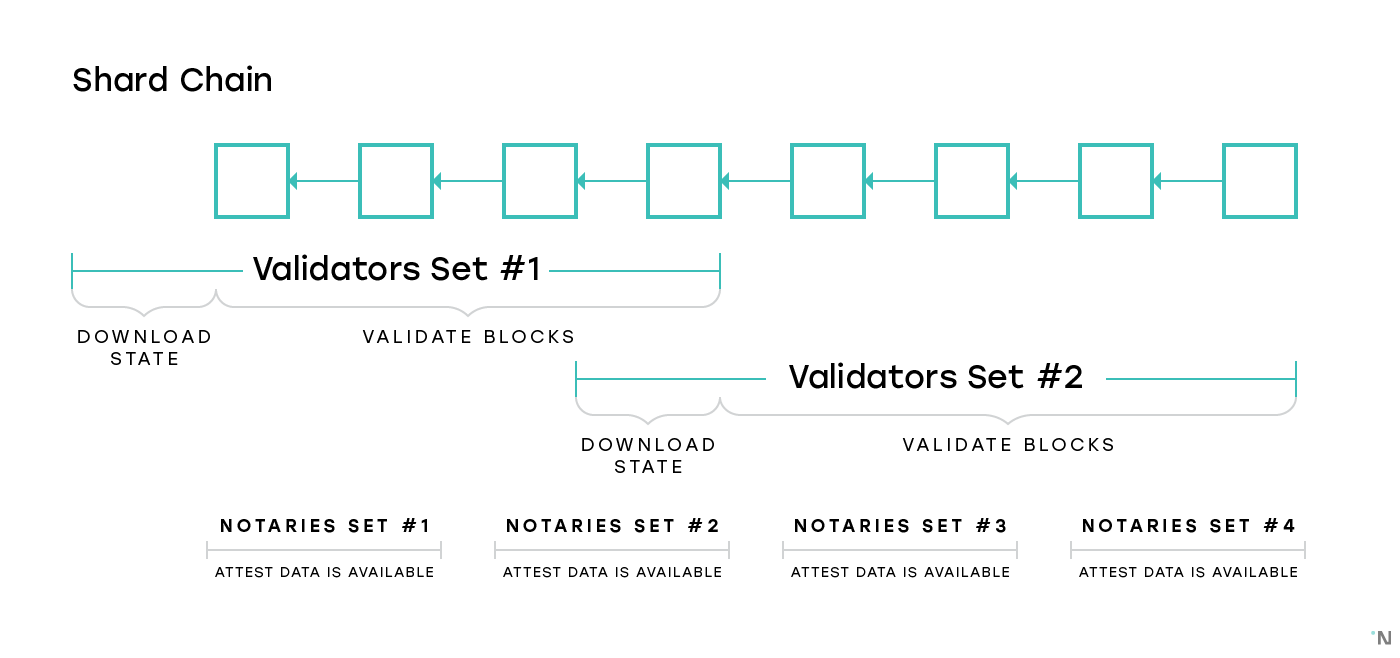

The most immediate problem to be solved is whether a block is available once it is published. One proposed idea is to have so-called Notaries, who rotate between shards more often than validators and whose only job is to download a block and attest to the fact that they were able to download it. They can be rotated more frequently because, unlike the validators, they don’t need to download the entire state of the shard.

The problem with this naive approach is that it is impossible to prove later whether the Notary was or was not able to download the block, so a Notary can choose to always attest that they were able to download the block without even attempting to retrieve it. One solution to this is for Notaries to provide some evidence or to stake some amount of tokens attesting that the block was downloaded. One such solution is discussed here.
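One hypothetical form such evidence could take is a value that cannot be computed without the block data and that can be checked later. The sketch below is an illustration of that general idea only, not the scheme referenced above. It assumes the Notary keeps a secret that it reveals at some later point, and that a failed check would cost the Notary its stake. All names are made up.

```python
import hashlib

def custody_commitment(notary_secret: bytes, block_data: bytes) -> bytes:
    """Value a Notary publishes alongside its attestation.

    It depends on every byte of the block, so it cannot be computed
    without actually holding the block data.
    """
    return hashlib.sha256(notary_secret + block_data).digest()

def commitment_is_honest(commitment, revealed_secret, block_data):
    """Run later by anyone holding the block, once the secret is revealed."""
    return commitment == custody_commitment(revealed_secret, block_data)

block = b"full contents of the block"
secret = b"notary-secret"

honest = custody_commitment(secret, block)
lazy = hashlib.sha256(secret).digest()   # Notary that never downloaded the block

print(commitment_is_honest(honest, secret, block))   # True
print(commitment_is_honest(lazy, secret, block))     # False
```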

Erasure Codes

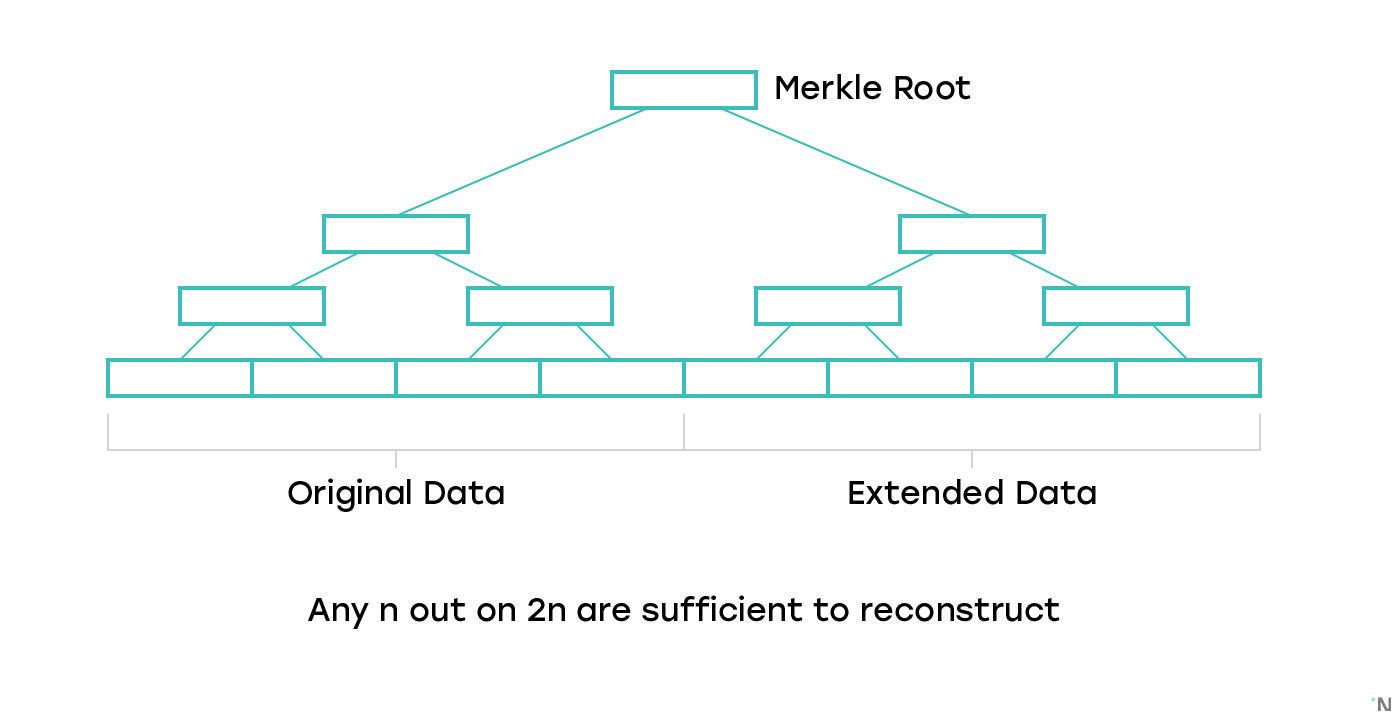

When a particular light node receives a hash of a block, to increase the node’s confidence that the block is available it can attempt to download a few random pieces of the block. This is not a complete solution, since unless the light nodes collectively download the entire block the malicious block producers can choose to withhold the parts of the block that were not downloaded by any light node, thus still making the block unavailable.
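How much confidence random sampling buys a single light node depends on how much of the block is withheld. The sketch below computes the chance that a node's samples all land on available pieces, assuming the block is split into equally sized pieces and the node samples distinct pieces uniformly at random. The piece counts and the function name are illustrative.

```python
from fractions import Fraction

def undetected_probability(total_pieces, withheld, samples):
    """Probability that `samples` distinct random pieces are all available,
    i.e. that the light node fails to notice the withholding."""
    available = total_pieces - withheld
    p = Fraction(1)
    for i in range(samples):
        if available - i <= 0:
            return Fraction(0)   # more samples than available pieces
        p *= Fraction(available - i, total_pieces - i)
    return p

# Withholding a single piece out of 256 is almost never noticed by one node...
print(float(undetected_probability(256, 1, 20)))
# ...while withholding half of the pieces is almost always noticed.
print(float(undetected_probability(256, 128, 20)))
```

This is why sampling alone is not enough: an adversary can make a block unusable by withholding a tiny fraction of it, which any individual light node is very unlikely to hit.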

One solution is to use a construction called Erasure Codes to make it possible to recover the full block even if only some part of the block is available:

Both Polkadot and Ethereum Serenity have designs around this idea that provide a way for light nodes to be reasonably confident the blocks are available. The Ethereum Serenity approach has a detailed description in this paper. Both approaches rely on challenges, and thus are potentially vulnerable to griefing attacks.
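To give a feel for what an erasure code does, here is the simplest possible one: a single XOR parity chunk, which lets any one missing chunk be rebuilt from the rest. This is a toy for illustration and not what either protocol uses. Practical designs typically rely on stronger codes such as Reed-Solomon that tolerate the loss of a large fraction of the pieces. The function names are made up, and all chunks are assumed to have equal length.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def encode_with_parity(chunks):
    """Append one parity chunk equal to the XOR of all data chunks."""
    parity = chunks[0]
    for chunk in chunks[1:]:
        parity = xor_bytes(parity, chunk)
    return list(chunks) + [parity]

def recover_chunks(pieces):
    """Return the original data chunks; at most one piece may be None."""
    missing = [i for i, piece in enumerate(pieces) if piece is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one missing piece")
    pieces = list(pieces)
    if missing:
        present = [piece for piece in pieces if piece is not None]
        rebuilt = present[0]
        for piece in present[1:]:
            rebuilt = xor_bytes(rebuilt, piece)
        pieces[missing[0]] = rebuilt     # XOR of the rest equals the lost piece
    return pieces[:-1]                   # drop the parity chunk

pieces = encode_with_parity([b"ab", b"cd", b"ef"])
pieces[1] = None                         # one piece is withheld
print(recover_chunks(pieces))            # [b'ab', b'cd', b'ef']
```

With a code that tolerates many missing pieces, an adversary must withhold a large fraction of the block to make it unrecoverable, and withholding that much is exactly what random sampling by light nodes is good at detecting.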

Long term availability, and Conclusion

Note that all the approaches discussed above only attest to the fact that a block was published at all, and is available now. Blocks can later become unavailable for a variety of reasons: nodes going offline, nodes intentionally erasing historical data, and others.

A whitepaper worth mentioning that addresses this issue is Polyshard, which uses erasure codes to make blocks available across shards even if several shards completely lose their data. Unfortunately their specific approach requires all the shards to download blocks from all other shards, which is prohibitively expensive.

Luckily, long-term availability is not as pressing an issue: since no participant in the system is expected to be capable of validating all the chains in all the shards, the security of the sharded protocol needs to be designed in such a way that the system is secure even if some old blocks in some shards become completely unavailable.

Data validity and data availability remain two problems in designing secure protocols that do not yet have a satisfactory solution. We are actively researching these problems. Stay tuned for updates.

Outro

Near Protocol builds a sharded general purpose blockchain with a huge emphasis on usability. If you like our write-ups, follow us on twitter to learn when we post new content:

If you want to be more involved, join our Discord channel where we discuss all technical and non-technical aspects of Near Protocol, such as consensus, economics and governance:

Near Protocol is being actively developed, and the code is open source, follow our progress on GitHub:

https://upscri.be/633436/

Thanks to Justin Drake from Ethereum Foundation, Alistair Stewart from Polkadot, Zaki Manian from Cosmos Protocol, Monica Quaintance from Kadena Protocol and Dan Robinson from Interstellar for reviewing an early draft of this post and providing feedback.
